Cooling Technology

The era of data center air cooling is over. Overstatement? No, I don't think it is. If you haven't read it yet, take a look at our analysis of system power trends (LINK) in the wake of the rush to AI. Here's a quick review:

TDPs for performance-oriented CPUs are in the 300-350 watt range today. In the next year or so, both AMD and Intel will have top SKUs with TDPs of 500 watts. Today's high-end DDR5 memory is also power hungry, with server-grade 64GB and 128GB RDIMMs consuming 10 and 15 watts respectively. Doing the math: 1,000 watts for dual CPUs, plus 320 (or 480) for memory (32 DIMMs at 10 or 15 watts each), plus another 100 watts or so for network cards, local storage, etc., adds up to 1,420 watts or so for a 2U server. Put 20 of them in a standard rack and you have a 28kW heater - and that's before adding any accelerators.
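The arithmetic above is easy to rerun with your own numbers. Here's a minimal Python sketch of it. The 32-DIMM count is my assumption (it's what makes 10 and 15 watt DIMMs add up to 320 and 480 watts), and the function names are purely illustrative.

```python
# Rough power budget for one 2U dual-socket server and a rack of 20.
CPU_TDP_W = 500                      # per socket, projected top-SKU TDP
SOCKETS = 2
DIMM_COUNT = 32                      # assumption: implied by 320 W at 10 W per DIMM
DIMM_W = {"64GB": 10, "128GB": 15}   # watts per RDIMM
OTHER_W = 100                        # network cards, local storage, etc.
SERVERS_PER_RACK = 20


def server_watts(dimm_size: str) -> int:
    """Estimated draw of one server, before any accelerators."""
    return CPU_TDP_W * SOCKETS + DIMM_COUNT * DIMM_W[dimm_size] + OTHER_W


def rack_kw(dimm_size: str) -> float:
    """Estimated draw of a full rack, in kilowatts."""
    return server_watts(dimm_size) * SERVERS_PER_RACK / 1000


if __name__ == "__main__":
    for size in DIMM_W:
        print(f"{size} RDIMMs: {server_watts(size):,} W per server, "
              f"{rack_kw(size):.1f} kW per rack")
```

With 64GB RDIMMs this gives 1,420 watts per server and 28.4kW per rack. Swapping in 128GB RDIMMs pushes it to 1,580 watts and 31.6kW.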

With AI functionality being added to many applications, there will have to be accelerators, which today are 700 to 1,200 watts each. Tomorrow will bring accelerators above 1,000 watts. Conservatively figure that a third of the nodes in your rack (call it seven of the 20) will need at least two GPUs (some will need four or more). That gives us 7 x 2,820 (two-way server plus dual 700 watt GPUs) = 19,740 watts, plus 13 x 1,420 (non-GPU servers) = 18,460 watts, for a grand total of about 38kW per rack. This is nearly 3x the heat output of the average rack today. Nobody knows that average for sure, but it's reasonably close.
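Here's the same mixed-rack estimate as a small Python sketch, so you can plug in your own GPU counts and wattages. It uses the 1,420 watt server figure from the earlier estimate for every node. The function and parameter names are made up for illustration.

```python
# Mixed rack: some nodes carry GPUs, the rest are plain two-socket servers.
BASE_SERVER_W = 1_420   # dual CPUs + memory + misc, from the earlier estimate
GPU_W = 700             # per accelerator, the low end of today's range


def mixed_rack_watts(total_nodes: int = 20, gpu_nodes: int = 7,
                     gpus_per_node: int = 2) -> int:
    """Total rack draw in watts for a mix of GPU and non-GPU nodes."""
    if gpu_nodes > total_nodes:
        raise ValueError("gpu_nodes cannot exceed total_nodes")
    gpu_node_w = BASE_SERVER_W + gpus_per_node * GPU_W
    plain_nodes = total_nodes - gpu_nodes
    return gpu_nodes * gpu_node_w + plain_nodes * BASE_SERVER_W


if __name__ == "__main__":
    watts = mixed_rack_watts()
    print(f"{watts:,} W, or about {watts / 1000:.0f} kW per rack")
```

The defaults reproduce the roughly 38kW figure. Bump `gpus_per_node` to 4 and the same rack lands near 48kW.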

This is why you won't be able to rely exclusively on air cooling anymore. You will have to move to liquid cooling in some form or other. Alternatively, you could outsource a bunch of your IT to a third party or a cloud, but that raises very significant cost, flexibility, and other concerns that need to be rigorously examined. That said, let's take a look at liquid cooling technology, how it works in general, and then lay out the technology choices.

Liquid Cooling Big Picture Overview

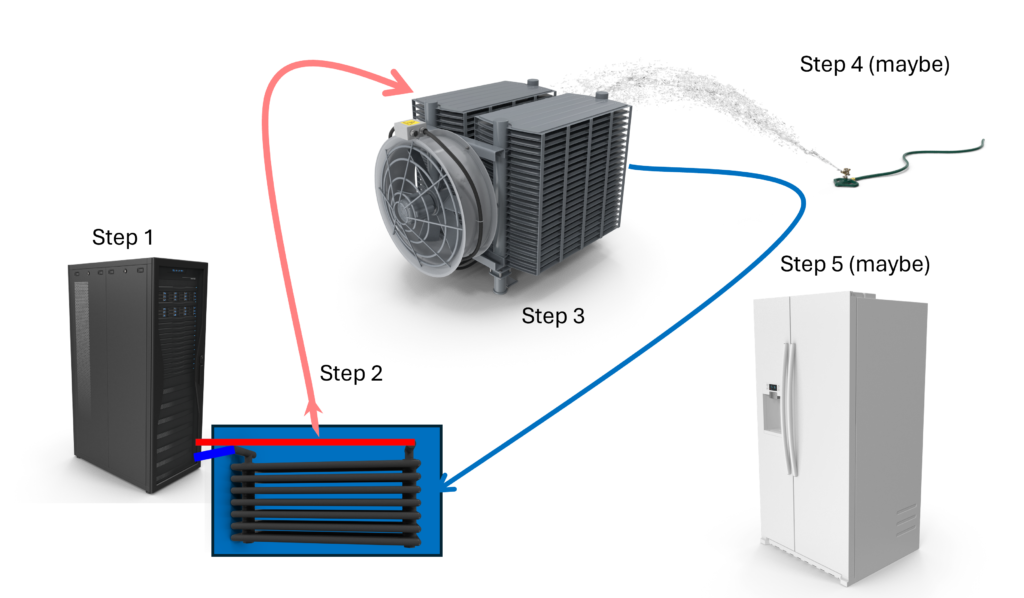

The stunningly artistic picture above illustrates how liquid cooling works in general. There are some variations, which we'll go into a little later, but this is a fantastic depiction of the components and process. (Note: None of the images below are to scale or, in at least one case, even realistic. Hard to believe I did this myself in PowerPoint, right?)

Step 1: Pumps circulate liquid through each node in the rack in a closed loop. With immersion cooling, this would be the immersion enclosure (we'll show some pictures later on). The liquid circulating inside the rack isn't strictly water. At a minimum it's water with some additives to aid cooling, and in some cases it's a synthetic liquid with un-water-like properties.

Step 2: This is a conceptual, and poorly executed, view of a CDU (Coolant Distribution Unit). It's a liquid-to-liquid heat exchanger where cool facility water flows through (the blue area), picks up heat from the server liquid loop, and transports it out of the data center. Important notes:

- One CDU can handle many racks. It's typically not a one-rack-to-one-CDU relationship.

- CDUs come in a wide variety of form factors and capacities. Some are at the end of rows with piping running under or over the racks. Others are entirely under a raised floor.

- CDUs all have instrumentation monitoring and controlling liquid flows and temperatures.
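To get a feel for what the liquid loop has to move, you can use the basic heat balance Q = m x cp x dT: heat carried equals mass flow times specific heat times temperature rise. The sketch below assumes plain water properties and a hypothetical 10 degree C rise across the rack. Real coolants with additives will differ somewhat, and none of these numbers come from a CDU vendor.

```python
# Coolant flow needed to carry a given heat load, from Q = m_dot * cp * dT.
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of plain water (assumption: no additives)
WATER_KG_PER_L = 1.0           # approximate density of water


def coolant_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    """Litres per minute of water needed to absorb heat_w with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_KG_PER_L * 60


if __name__ == "__main__":
    # A 38 kW rack with a hypothetical 10 K temperature rise across it.
    print(f"{coolant_flow_l_per_min(38_000, 10):.1f} L/min")
```

For a 38kW rack and a 10 degree rise, that works out to roughly 54 litres per minute. Halve the allowed temperature rise and the required flow doubles.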

Step 3: Now we're up on the roof or somewhere else outside the building. The now-warm water leaves the CDU(s) and is pumped through a radiator where high-velocity air cools the water, thus removing the heat collected from the systems. This unit is generally called a "dry cooler" or "cooling tower" or something along those lines. Important notes:

- This is 'free cooling' since the fans and pumps use far less power than chillers/condensers. When your outside air is colder than the temperature you need for your facility water loop, then a dry cooler is a great solution.

- The temperature of the liquid in the facility water cooling loop doesn't have to be all that cold. ASHRAE and others say it can be as warm as 89F (32C) and still provide enough cooling capability for systems. Unless your outside ambient temperatures are pretty high, you will get serious benefits from a dry cooler.

- Variable speed fans will increase dry cooler energy efficiency.

- However, the temperature you need for your facility water depends on the number of systems you're trying to cool and their configs. As TDPs on CPUs rise above 500 watts and on GPUs above 700 watts, the water coming out of the cooling tower and heading to the systems will need to be closer to 80F (26.7C).
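A quick way to sanity-check whether a dry cooler can work at your site is to convert the target water temperature to Celsius and subtract the "approach", meaning how much warmer than the outside air the water comes back from the radiator. The 5 degree C approach in this sketch is a placeholder I picked for illustration, not a figure from any dry cooler datasheet.

```python
# Fahrenheit-to-Celsius conversion plus a rough dry cooler ambient limit.

def f_to_c(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32) * 5 / 9


def max_ambient_for_dry_cooling_c(supply_f: float, approach_c: float = 5.0) -> float:
    """Warmest outside air (C) at which a dry cooler alone can hit supply_f.

    approach_c is a hypothetical gap between outside air and the water
    leaving the radiator; check your equipment's real number.
    """
    return f_to_c(supply_f) - approach_c


if __name__ == "__main__":
    for supply_f in (89, 80):
        print(f"{supply_f}F water = {f_to_c(supply_f):.1f}C, dry cooler OK up to "
              f"{max_ambient_for_dry_cooling_c(supply_f):.1f}C outside")
```

The takeaway: dropping the water target from 89F to 80F lowers the warmest outside air a dry cooler can handle by about 5 degrees C, which means fewer hours per year of free cooling.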

Step 4: In our simple (and bad) illustration above, the lawn sprinkler represents Adiabatic Cooling, which adds more cooling punch to your dry cooler. Imagine that the radiator in Step 3 above is surrounded by wet porous material. As the fans draw in air, it passes through this material and is pre-cooled and humidified. Cooler and more humid air will carry significantly more heat away as it is pushed through the facility water radiator. This is a great solution in hot, dry climates and in places where the rooftop ambient temperatures are very high. Important notes:

- Water is only used on the dry cooler when it's needed, and it's not a lot of water. A little bit goes a long way when it comes to pre-cooling air and adding humidity.

- Adiabatic coolers can be smaller than dry coolers because of the performance they can provide. This makes them an easier fit and takes better advantage of rooftop real estate.

- You have to factor in not only energy costs, but also water consumption and waste water costs. Depending on climate, scale, usage, and other factors, the water costs can be significant.

Step 5: The refrigerator in the picture represents traditional water chillers, which have long been used to drop liquid temperatures for data centers and a wide variety of other industrial needs. They use compressors, condensers, and refrigerant to remove heat and return cooler liquid to the facility loop - which is a pretty energy-intensive process compared to a dry cooler or adiabatic cooling. Important notes:

- Chillers are quite a bit more complicated, and thus more expensive to purchase, than dry coolers, even dry coolers with adiabatic add-ons.

- Operating costs for chillers are higher than for the others too. Refrigeration is a much more energy-intensive process.

- With dry coolers and, if needed, adiabatic add-ons, the load on your chillers will be much lower, meaning lower costs.

- Even with all of the above, there is still a place for chillers, but as a failsafe: a last-resort source of cooling.
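Steps 3 through 5 boil down to a pecking order: use the dry cooler when the outside air is cool enough, add adiabatic pre-cooling when it isn't, and fall back to chillers as the last resort. Here's a simplified Python sketch of that decision. It assumes adiabatic pre-cooling can bring the intake air down to about the wet-bulb temperature (real units only get part of the way there), and the 5 degree C approach is a placeholder, so treat the thresholds as illustrative only.

```python
# Simplified cooling-mode selector: dry cooler first, adiabatic next, chiller last.

def pick_cooling_mode(dry_bulb_c: float, wet_bulb_c: float,
                      supply_target_c: float, approach_c: float = 5.0) -> str:
    """Return the cheapest mode that can plausibly hit supply_target_c.

    dry_bulb_c / wet_bulb_c: outside air temperatures in Celsius.
    approach_c: hypothetical gap between intake air and water leaving the radiator.
    """
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb temperature")
    if dry_bulb_c + approach_c <= supply_target_c:
        return "dry cooler"
    # Assumption: pre-cooling pulls intake air down to roughly wet-bulb.
    if wet_bulb_c + approach_c <= supply_target_c:
        return "dry cooler + adiabatic"
    return "chiller"


if __name__ == "__main__":
    for dry, wet in [(20, 15), (35, 20), (38, 30)]:
        print(f"{dry}C dry / {wet}C wet -> {pick_cooling_mode(dry, wet, 32.0)}")
```

A mild day gets by on the dry cooler, a hot and dry day needs the adiabatic assist, and a hot and humid day is where the chillers earn their keep.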

Liquid Cooling - Choices, Choices, Choices

In our big picture illustration above, we sort of 'assumed' some cooling inside the server and had it bringing in cool liquid and pushing out warm liquid. Now we're going to take a look inside and discuss what's under the covers.

| Primary Business | Company | Cooling Tech | Product Types or Range | HQ Location / Coverage | Founded / Employees |
| --- | --- | --- | --- | --- | --- |
| Cooling Specialist | | DLC | Cold plates, modular enclosures, radiators, pumps, manufacturer, customization | Germany, EU / Worldwide | 2004 / 400 |
| Cooling Specialist | | DLC | CDU, cold plates, rack/server manifolds, negative pressure, water quality control | California, US / Worldwide | 2011 / ?? |
| Cooling Specialist | | DLC, RDHx | CDUs, cold plates, manifolds, SFN, services, rear door heat exchangers | Calgary, Canada / Worldwide | 2001 / 248 |
| Cooling Specialist | | DLC and Immersion | CDUs, cold plates (DLC), immersion enclosures (multiple size, stacking), manifolds, dry coolers, custom full solutions | Warsaw, Poland / Worldwide | 2016 / ??? |
| Cooling Specialist | | Hybrid Liquid Cooling | Liquid cooled rack enclosure, modular approach, retrofit or new installations, no modifications to existing equipment | California, US / Worldwide | 2011 / ?? |
| Cooling Specialist | | DLC 2-Phase | 2 phase cooling, cold plates, pumps, manifolds, fluid, management/monitoring | California, US / Worldwide | 2016 / ?? |